Analytics and In-Memory Databases Are Changing Data Centers

Posted: Tue Jan 04, 2011 11:39 am

ECRM Guide article

Interesting article... though it's probably something we all saw coming.

Power and Cooling, Memory Needs Drive Change

The trend toward in-memory analytics is being driven in part by power, cooling and memory demands.

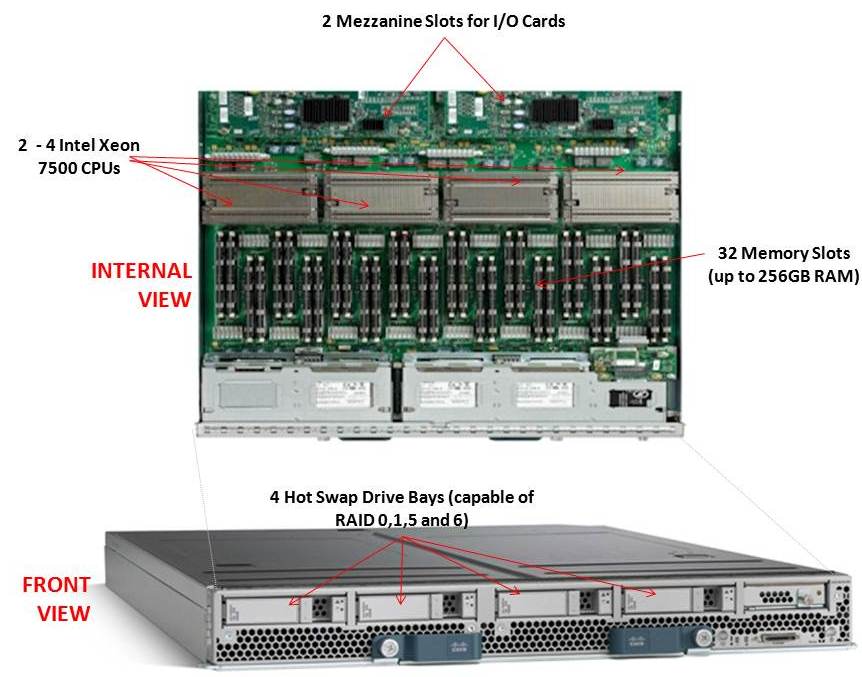

Power and cooling needs expand as the database grows, because more and more servers are required and power and cooling requirements grow with the server count. Larger and larger amounts of memory are also needed to keep up with the number of cores and the processing power available in each CPU, and the more memory you require, the more power and cooling you need. The power drawn by DDR3 memory in even 4 DIMM slots can exceed the power required by a single processor, depending on the processor. Using more than 4 DIMM slots per processor is becoming more common, and of course the core count is growing with no end in sight. If you assume that you need 2 GB of memory per core for efficient analytics processing, a 12-core CPU will need 24 GB of memory. Flash is not a substitute: matching the performance of main memory with flash drives or PCIe flash devices is not cost-effective, given the number of PCIe buses needed, the cost of the devices and the complexity of using them compared to just using memory.
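The sizing arithmetic above can be sketched in a few lines. Only the 2 GB per core figure and the 12-core CPU come from the article; the function names and the 4 GB DIMM size are assumptions made for illustration.

```python
def memory_per_socket_gb(cores, gb_per_core=2):
    """Memory needed for one CPU socket, using the article's 2 GB/core rule of thumb."""
    return cores * gb_per_core


def dimms_needed(memory_gb, dimm_size_gb):
    """Number of DIMMs required to hold memory_gb (ceiling division)."""
    return -(-memory_gb // dimm_size_gb)


mem = memory_per_socket_gb(12)   # 12 cores at 2 GB per core
dimms = dimms_needed(mem, 4)     # 4 GB DIMMs are a hypothetical choice
print(mem, dimms)                # prints: 24 6
```

With the assumed 4 GB DIMMs, a 12-core socket already needs six DIMMs, which fits the article's remark that more than 4 DIMM slots per processor is becoming common.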

I/O Complexity Slows the Data Path

Another reason that many data analytics programs have moved to memory-only methods is I/O complexity and latency.

Historically, I/O has been thousands of times slower than memory in both latency and performance. Even with flash devices, I/O latency is measured in tens of microseconds to milliseconds, compared to well under a microsecond for main memory. Even if flash device latency improves, a request still has to go through the OS and across the PCIe bus, whereas a memory access needs only a direct hardware address translation.
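To get a feel for what the latency gap described above costs, here is a rough sketch that converts an access latency into the number of clock cycles a core could have executed while waiting. The 3 GHz clock and the sample latencies are illustrative assumptions, not measurements, and the model ignores overlapping requests and context switches.

```python
def stalled_cycles(latency_seconds, clock_hz=3.0e9):
    """Clock cycles that elapse while a core waits latency_seconds for data.

    clock_hz defaults to a hypothetical 3 GHz core.
    """
    return latency_seconds * clock_hz


# Order-of-magnitude sample latencies, from memory-like to disk-like.
samples = [
    ("100 ns", 100e-9),
    ("1 us", 1e-6),
    ("100 us", 100e-6),
    ("1 ms", 1e-3),
]

for label, latency in samples:
    print(f"{label:>7}: {stalled_cycles(latency):,.0f} cycles")
```

Each factor of a thousand in latency is a factor of a thousand in cycles lost per access, which is the sense in which slow I/O leaves CPUs idle.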

Knowledge of how to do I/O efficiently is limited because I/O programming is not taught in schools. From what I have seen, little is taught beyond the C library calls fopen/fread/fwrite. Even if high-performance, low-latency I/O programming were taught, performance would still be significantly limited because the available interfaces are minimal.

The cost of I/O in terms of operating system interrupt overhead, latency and the path through the I/O stack is another limitation. Whenever I/O is done, the operating system must be called to do the I/O. This has significant overhead and latency and cannot be eliminated given current operating system implementations.
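The point that every I/O request means a call into the operating system can be made concrete with a small sketch. It reads the same file twice with Python's os.read, a thin wrapper over the read system call, and counts the calls for a small and a large buffer. The helper names, the 1 MiB file size and the two chunk sizes are assumptions chosen for illustration.

```python
import os
import tempfile


def make_test_file(size):
    """Create a temporary file of `size` zero bytes and return its path."""
    fd, path = tempfile.mkstemp()
    with os.fdopen(fd, "wb") as f:
        f.write(b"\0" * size)
    return path


def count_read_calls(path, chunk_size):
    """Read the whole file with os.read; return (number of calls, bytes read)."""
    fd = os.open(path, os.O_RDONLY)
    calls = 0
    total = 0
    try:
        while True:
            data = os.read(fd, chunk_size)  # one trip into the OS per call
            calls += 1
            if not data:                    # empty result signals end of file
                break
            total += len(data)
    finally:
        os.close(fd)
    return calls, total


path = make_test_file(1 << 20)  # 1 MiB of data
try:
    small = count_read_calls(path, 512)
    large = count_read_calls(path, 64 * 1024)
finally:
    os.remove(path)

print("512 B chunks :", small)
print("64 KiB chunks:", large)
```

Larger requests reduce how often the operating system is entered, but they cannot remove the call itself, which is the overhead the article says cannot be eliminated with current implementations.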

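The problem is that the I/O stack has not changed much at all in 30 years. The data path currently runs from the application through an operating system call, the file system POSIX layer and the SCSI/SATA driver before it reaches the device.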

There are no major changes on the horizon for the I/O stack, which means that any application still has to go through interrupting the operating system, the file system POSIX layer and the SCSI/SATA driver. Some flash PCIe vendors have developed changes to the I/O stack, but they are proprietary. I see nothing on the standards horizon that looks like a proposal, much less something that all of the vendors can agree upon. The standards process is controlled by a myriad of groups, so I have little hope of change, which is why storage will be relegated to checkpointing and restarting these in-memory applications. It is clear that data analytics cannot be accomplished efficiently using disk drives, or even flash drives, because expensive CPUs would sit idle waiting for data.
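The role the article leaves for storage, checkpointing and restarting an in-memory application, might look something like the following minimal sketch. The function names, the pickle format, the file name and the toy running-total state are all assumptions for illustration, not anything the article or a particular product prescribes.

```python
import os
import pickle
import tempfile


def save_checkpoint(state, path):
    """Write state to path atomically: temp file, fsync, then rename."""
    directory = os.path.dirname(os.path.abspath(path))
    fd, tmp_path = tempfile.mkstemp(dir=directory)
    try:
        with os.fdopen(fd, "wb") as f:
            pickle.dump(state, f)
            f.flush()
            os.fsync(f.fileno())    # push the data to stable storage
        os.replace(tmp_path, path)  # swap the new checkpoint into place
    except BaseException:
        if os.path.exists(tmp_path):
            os.remove(tmp_path)
        raise


def load_checkpoint(path, default):
    """Return the saved state, or default if no checkpoint exists yet."""
    try:
        with open(path, "rb") as f:
            return pickle.load(f)
    except FileNotFoundError:
        return default


# Hypothetical in-memory analytics state: a running count and sum.
workdir = tempfile.mkdtemp()
ckpt = os.path.join(workdir, "analytics.ckpt")

state = load_checkpoint(ckpt, default={"rows_seen": 0, "total": 0.0})
for value in [1.5, 2.5, 4.0]:       # all work happens in memory
    state["rows_seen"] += 1
    state["total"] += value
save_checkpoint(state, ckpt)        # storage is touched only here

restored = load_checkpoint(ckpt, default=None)  # what a restart would see
print(restored)
```

Writing to a temporary file and renaming it means a crash during the checkpoint leaves the previous checkpoint intact, so a restart always finds a complete state.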

Future Analytics Architectures

As data analytics demands more and more memory because of the increase in CPU core counts, new memory technologies will need to be developed and brought to market to address the requirements. Technologies such as double-stacked DDR3, phase change memory (PCM), memristors and others are going to be required to meet the needs of this market. Data analytics is a memory-intensive application, and even high-performance storage does not have enough bandwidth to address the requirements. Data analytics applications are also latency intolerant, while the storage stack is high latency and OS hungry compared to memory, even slower memory such as PCM or memristors, and this latency cannot be changed. The combination makes data analytics a memory-based application.
